We often have people contact us who believe that a claim that their website has been hacked is false because they ran a scanner over it and it didn’t find anything. We are not really sure why they don’t ask for the evidence behind the claim and try to confirm whether it is accurate instead of running a scanner over the website, but considering they are not doing that, it might not be surprising that they are instead doing something that is unlikely to produce great results.

One problem is that even if the scanner is effective at what it is attempting to scan for, it may not be able to detect the type of issue that led to the claim that the website is hacked. Let’s say a web host detects a malicious file on the website; that probably would be something that a scan of the website’s pages from the outside would never detect.

Another problem is a lack of evidence that various scanners are actually effective at what they are attempting to scan for and, from our own experience, plenty of evidence that they are not effective. One area where we have seen evidence of that going back many years is with really bad false positives that indicate that these scanners are incredibly crude, so crude in fact that if we weren’t well aware of how bad the security industry is, we would have a hard time believing that they were even occurring. Below are a couple of them in WordPress plugins that we recently ran across that show the current poor state of such tools.

Quttera Web Malware Scanner

The first comes from the plugin Quttera Web Malware Scanner, which has 10,000+ active installs according to wordpress.org. In a recent thread on the support forum for it, someone mentioned getting a false positive for what is quite common code. The plugin will warn when matching “RewriteRule ^(.*)$ h” in a .htaccess file, which would match when doing some fairly common rewriting of URLs. Just doing that rewriting is not in any way malicious. The developer’s explanation wasn’t that this was a mistake, but that:

We mark it as suspicious because there are multiple malware instances utilizing this technique to steal/redirect traffic from infected websites.

That malware uses common code isn’t a good reason to flag any usage of that code, and doing so will necessarily cause the results of a scanner to be of limited use.

Making it seem like the developer really doesn’t know what they are doing in general, the description for that detection is “Detected suspicious JavaScript redirection”, which makes no sense considering that type of code has nothing to do with JavaScript.
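To show how broad that match is, here is a minimal sketch. It assumes the check amounts to a plain substring match on the text quoted in the support thread; we have not reviewed the plugin's actual implementation, so the function and variable names are hypothetical, and example.com is a placeholder.

```python
# Hypothetical reconstruction: assumes the detection is a plain substring
# match on the text quoted in the support thread, not the plugin's real code.
SUSPICIOUS_SUBSTRING = "RewriteRule ^(.*)$ h"


def is_flagged(htaccess_text: str) -> bool:
    """Return True if any line contains the quoted substring."""
    return any(SUSPICIOUS_SUBSTRING in line for line in htaccess_text.splitlines())


# An ordinary rule set that redirects HTTP requests to HTTPS.
# example.com is a placeholder domain.
BENIGN_HTACCESS = """\
RewriteEngine On
RewriteCond %{HTTPS} off
RewriteRule ^(.*)$ https://example.com/$1 [R=301,L]
"""

# A rule that does not capture the whole path and rewrite to a URL.
OTHER_RULE = "RewriteRule . /index.php [L]"

if __name__ == "__main__":
    print(is_flagged(BENIGN_HTACCESS))
    print(is_flagged(OTHER_RULE))
```

Under that assumption, the harmless HTTPS redirect is flagged simply because the rewrite target starts with "h", as any http:// or https:// URL does.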

Wordfence Security

The second instance of this involves a much more popular plugin, Wordfence Security, which has 2+ million active installs according to wordpress.org and which we have frequently seen people believe is much more capable than it really is (sometimes they have ignored evidence right before their eyes to continue to believe that).

A thread on the support forum of the plugin Ultimate Member was recently started with:

Wordfence seems to think there is a malware URL somewhere in the file class-um-mobile-detect.php:

* File contains suspected malware URL: wp-content/plugins/ultimate-member/includes/lib/mobiledetect/class-um-mobile-detect.php

but on comparison, the file’s contents are exactly the same as the latest file offered on https://ultimatemember.com

Can someone comment?

In a follow-up to a question by the developer of the mentioned plugin, the original poster wrote:

I’m using 2.0.23 but as I’ve said the file in question is identical to the one found in the latest version. So as I thought it is a false positive. Maybe Wordfence doubled up on UM after the latest malware exploit.

In reality it was just that Wordfence’s scanner is incredibly crude, as hinted at by another reply in the thread:

It is caused by the URL: “http://www.vonino.eu/tablets” which was reported to contain malware.

In my file, it’s only mentioned in a comment so I guess it’s safe.

What that is referring to is the following line in the file /wp-content/plugins/ultimate-member/includes/lib/mobiledetect/class-um-mobile-detect.php:

// Vonino Tablets - http://www.vonino.eu/tablets

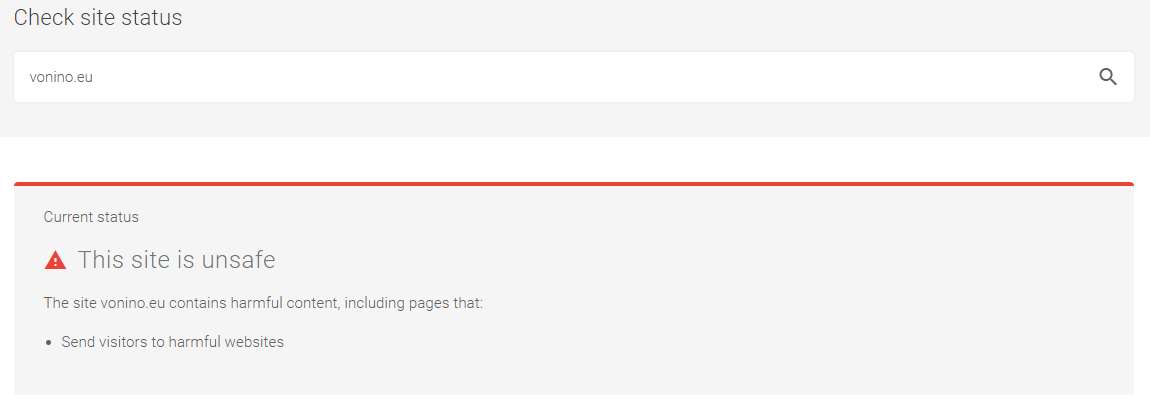

Currently the domain vonino.eu is being flagged by Google as malicious:

That doesn’t make a file that includes the domain in a commented-out line in the code, which can’t run, in any way malicious. If the developers of Wordfence Security cared at all they could easily avoid that false positive, but considering they can get away with much worse it isn’t surprising they wouldn’t care about that. That also leaves more responsible plugin developers to have to deal with the fallout from those false claims.
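As a rough illustration of how little it would take to avoid this kind of false positive, here is a minimal sketch that contrasts a scan of the raw file text with one that skips comment lines first. It is not how Wordfence works internally, which we have not reviewed; the function names and the one-entry blocklist are hypothetical, and the comment check is deliberately simplified to whole-line comments. A real scanner for PHP files would more sensibly use a proper tokenizer, such as PHP's own token_get_all().

```python
# Hypothetical one-entry blocklist, used only for this illustration.
FLAGGED_DOMAINS = {"vonino.eu"}


def naive_scan(php_source: str) -> bool:
    """Flag the file if a blocklisted domain appears anywhere in its text."""
    return any(domain in php_source for domain in FLAGGED_DOMAINS)


def is_comment_line(line: str) -> bool:
    """Simplified check: treats only whole-line comments as comments."""
    return line.strip().startswith(("//", "/*", "*"))


def comment_aware_scan(php_source: str) -> bool:
    """Flag the file only if a blocklisted domain appears outside comment lines."""
    code_lines = [line for line in php_source.splitlines() if not is_comment_line(line)]
    return naive_scan("\n".join(code_lines))


# The domain appears only in a comment, as in the Ultimate Member file.
COMMENT_ONLY = "<?php\n// Vonino Tablets - http://www.vonino.eu/tablets\n$tablet = 'Vonino';\n"

# The domain appears in code that could actually be used at runtime.
USED_IN_CODE = "<?php\n$url = 'http://www.vonino.eu/tablets';\n"

if __name__ == "__main__":
    print(naive_scan(COMMENT_ONLY), comment_aware_scan(COMMENT_ONLY))
    print(naive_scan(USED_IN_CODE), comment_aware_scan(USED_IN_CODE))
```

In this sketch the naive scan flags both samples, while the comment-aware scan flags only the one where the URL is part of code that can actually run.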